AI SIEM Global Roadmap

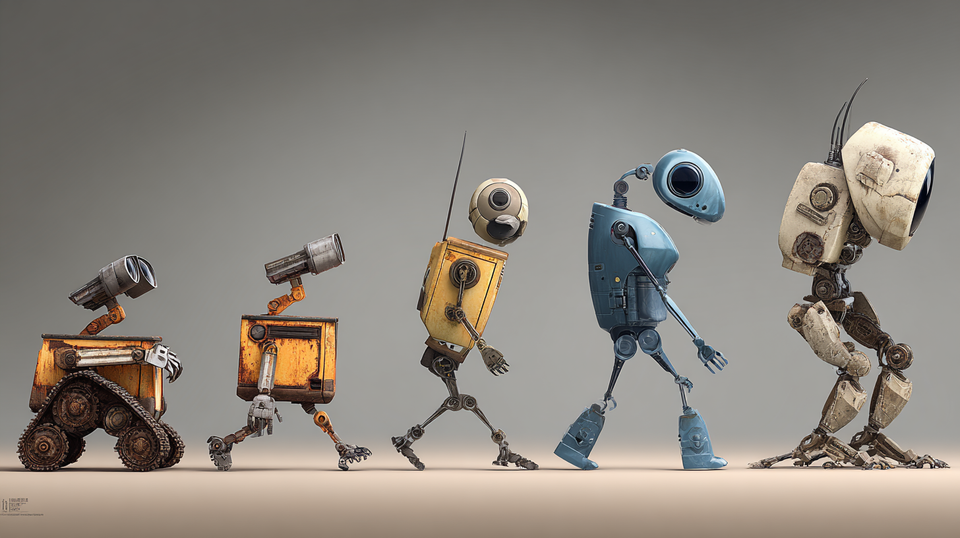

The Global AI SOC roadmap defines the progression of AI-driven SOC platforms as five distinct phases (Atomic, Role-based, Process-based, Action-oriented, and Self-learning) and clarifies how each phase sets the stage for the next.

The security operations industry is entering a pivotal era where artificial intelligence is no longer an experimental add-on but a foundational component in how organizations detect, investigate, and respond to threats. Across vendors, there is a clear consensus on the need to either enhance or fully replace first-tier and second-tier analysts, as well as elements of response teams, to address three pressing realities:

- Volume of data – SOC teams face an unprecedented stream of telemetry and alerts that cannot be processed at human scale.

- Need for speed – Attackers move quickly, and latency in triage or investigation directly increases risk.

- Need for quality – Often overlooked in marketing, but in practice, quality is a decisive factor. A repetitive process, once well-vetted and tuned, produces more accurate results, reduces noise, and improves downstream decision-making.

To achieve consistent quality at scale, AI-driven SOC platforms must follow a sequential path. Each phase builds upon the fundamentals established in the one before it. You cannot implement self-learning if you lack reliable action-oriented workflows, just as you cannot execute safe actions without mature, well-designed process orchestration. This roadmap defines that progression as five distinct phases, Atomic, Role-based, Process-based, Action-oriented, and Self-learning, and clarifies how each phase sets the stage for the next.

In the first section, we define these phases in detail. In the second, we assess where the leading vendors currently stand against this roadmap, using only consistent, production-proven capabilities, not selective or marketing-driven claims. Finally, we look at the stumbling blocks that keep fully automated SOCs just out of reach.

Phases of AI

While there are multiple public frameworks describing the progression of “agentic AI” capabilities, the phases outlined here are a consolidation and simplification of the most relevant industry models. These include Microsoft’s Agentic Maturity Model, Lucidworks’ four-level structure, the Architecture & Governance five-level approach, and well-cited research patterns such as CAMEL role-play agents, AutoGen multi-agent conversations, and ReAct plan-and-execute workflows. Each of these frameworks contributes useful perspectives, but none are written specifically for the demands of SOC operations. The Path to Self-Learning Systems presented here serves as a practical, SOC-focused maturity model for Generative AI, defining the sequential capabilities required to progress from simple, single-model assistance to fully autonomous, self-improving security platforms.

Table 1 – Path to Self-Learning Systems

| Phase | Definition | What Defines Completion of this Phase |
|---|---|---|
| 1. Atomic | A single AI model operating over a fixed dataset or narrow query scope, performing tasks like summarization, enrichment, and answering questions. No orchestration, no state tracking, and no changes to the system itself. | • Model produces reliable results on known tasks without human reformatting. • Consistently answers queries or summarizes events within its dataset. • No dependency on multi-agent coordination or process flow. |
| 2. Role-Based | Multiple AI agents, each operating with a specific “role” or “persona” (e.g., Tier 1 Analyst, Incident Responder). These roles follow predefined prompts and perspectives but still act in isolation. | • Distinct agent prompts produce role-specific outputs. • Roles can hand off to each other in sequence. • Each role’s scope and responsibilities are clearly defined and repeatable. |
| 3. Process-Based | Explicit workflows are defined and executed; classification leads to specialized flows (e.g., impossible travel, anonymization checks). Workflow logic is central, not ad hoc agent conversation. | • All major investigative and enrichment tasks are represented in documented workflows. • Workflows can run without manual intervention once triggered. • Processes consistently produce complete, scoped outputs ready for review or action. |
| 4. Action-Oriented | The system can execute operational changes (e.g., isolate host, disable account) based on workflow outputs, with appropriate guardrails, approvals, and logging. | • Actions can be triggered directly from workflow results. • Guardrails and approval mechanisms are in place. • All actions are logged, reversible, and subject to audit. |
| 5. Self-Learning | The system evaluates its own outputs, compares them to desired outcomes, and adjusts prompts, workflows, and tools accordingly, without needing full manual reprogramming. | • Continuous QA loop that measures performance and correctness. • System can autonomously adjust workflow parameters or prompt instructions. • Changes are tracked and reviewed under governance policy. |
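
The sequential nature of the table can be made concrete with a small sketch. It assumes a platform is assessed as a set of completed phases; the `Phase` enum and `current_phase` helper are hypothetical names introduced here for illustration, not part of any vendor's tooling.

```python
from __future__ import annotations

from enum import IntEnum


class Phase(IntEnum):
    """The five roadmap phases, numbered as in Table 1."""
    ATOMIC = 1
    ROLE_BASED = 2
    PROCESS_BASED = 3
    ACTION_ORIENTED = 4
    SELF_LEARNING = 5


def current_phase(completed: set[Phase]) -> Phase | None:
    """Return the highest phase whose predecessors are all complete.

    A later phase does not count if an earlier one is missing, which
    mirrors the roadmap's claim that phases build on each other.
    """
    reached = None
    for phase in Phase:  # IntEnum members iterate in definition order
        if phase not in completed:
            break
        reached = phase
    return reached
```

Under this reading, a platform that can execute actions but has no defined workflows would still be assessed at Phase 2, because the Process-based prerequisite is missing.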

In addition to understanding the levels of maturity, it is important to define a few key terms that frame how these capabilities are delivered in practice.

Copilot vs. Autopilot

- Copilot – Human-initiated. The analyst prompts the system, which then responds. May include follow-up suggestions, but the system does not start workflows on its own.

- Autopilot – System-initiated. Multi-step workflows begin automatically based on triggers or detections, can run end-to-end, and may include human approval before certain actions.

Scope Categories

- Own Products – Works only with the vendor’s native telemetry, detections, and tools. No external data sources or actions without manual integration.

- Walled Garden – Works with the vendor’s products plus selected partner integrations. Still bound to vendor-defined ecosystem rules and data formats.

- Agnostic – Works across arbitrary, third-party data sources and tools without vendor lock-in. Capable of ingesting, processing, and acting on multi-vendor environments.

Transition to Industry Comparison

With the maturity phases and key terms defined, we can now map leading AI-driven SOC and security platforms against these criteria. In this comparison, we remove product branding and focus on the essentials: which phase they consistently operate at today, whether their operation mode is copilot or autopilot by our strict definitions, and their scope (own products, walled garden, or agnostic). This avoids marketing overstatement and provides a clear, operational view of the industry landscape.

Table 2 – Industry Comparison by Phase, Mode, and Scope

| Vendor | Phase (1–5) | Mode | Scope |
|---|---|---|---|
| Fluency Assist | 3 → 4 | Autopilot | Agnostic |
| Microsoft (Security Copilot / Agents) | 3 → 4 (preview) | Copilot | Walled Garden |
| CrowdStrike (Charlotte AI) | 3 → 4 | Copilot | Walled Garden |
| SentinelOne (Purple AI) | 3 → 4 | Copilot | Own Products |
| Elastic (AI Assistant) | 2 → 3 | Copilot | Agnostic |
| Sumo Logic (Mo Copilot) | 1 → 2 | Copilot | Agnostic |
| Command Zero | 3 (aiming 4) | Autopilot | Agnostic |
| Bricklayer AI | 2 → 3 (vision to 4) | Planned Autopilot | Agnostic |

In summary, the table reveals a clear pattern: human-in-the-loop “copilot” modes dominate the market. The majority of vendors remain reluctant to remove the analyst from the operational loop, positioning their AI as an enhancement tool rather than a replacement. This approach may improve analyst efficiency, but it falls short of the scalability implied by much of the market hype. Another trend is that most major vendors operate in a walled garden or product-only scope, limiting automation to their own ecosystem or a select set of partners. By contrast, traditional agnostic SIEM platforms tend to maintain broader, vendor-neutral integration, enabling more diverse data analysis but often without the same depth of native action capabilities.

Stumbling Block #1 – Volume-to-Cost and the Lack of True SIEM Processes

The first major obstacle to delivering full, autonomous AI in SOC operations is the volume-to-cost problem. AI models operate on tokens, and without a mechanism to reduce the volume of data before it reaches the AI layer, running large-scale analysis quickly becomes prohibitively expensive. It is neither technically nor economically viable to feed every single event and alert directly into an AI and evaluate them independently.
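
A rough worked example shows the scale of the problem. Every number below (event volume, tokens per event, price per million tokens) is a hypothetical placeholder chosen for easy arithmetic, not a measurement or a quoted price.

```python
def daily_llm_cost(units_per_day: int, tokens_per_unit: int,
                   usd_per_million_tokens: float) -> float:
    """Cost in USD per day of sending each unit to a model independently."""
    total_tokens = units_per_day * tokens_per_unit
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical: every raw event is evaluated on its own.
raw = daily_llm_cost(units_per_day=100_000_000, tokens_per_unit=500,
                     usd_per_million_tokens=1.0)

# Hypothetical: the stream is first reduced to 2,000 clustered
# investigations, each much larger than a single event.
reduced = daily_llm_cost(units_per_day=2_000, tokens_per_unit=20_000,
                         usd_per_million_tokens=1.0)

print(f"raw: ${raw:,.0f}/day, reduced: ${reduced:,.0f}/day")
# prints: raw: $50,000/day, reduced: $40/day
```

The absolute figures are illustrative only. The point is that cost scales linearly with the number of units sent to the model, so reduction ahead of the AI layer dominates the bill.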

A mature SOC platform must include a down-selection mechanism that determines when to trigger AI evaluation. This means being able to cluster events, grouping related alerts and telemetry into meaningful investigative units, so that AI focuses on the right problems at the right time. The trigger logic and clustering capability are as critical as the AI itself.
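
One simple way to picture clustering and trigger logic is sketched below. It assumes alerts carry an entity, a timestamp, and a rule name, and it uses a one-hour gap and a two-rule threshold purely as example parameters. Production systems would use richer correlation; all names here are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    entity: str     # user or host the alert is about
    timestamp: int  # seconds since epoch
    rule: str       # name of the detection that fired


def cluster_alerts(alerts, window_seconds=3600):
    """Group alerts by entity, starting a new cluster when the gap
    between consecutive alerts exceeds the window."""
    by_entity = defaultdict(list)
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        by_entity[alert.entity].append(alert)

    clusters = []
    for entity_alerts in by_entity.values():
        current = [entity_alerts[0]]
        for alert in entity_alerts[1:]:
            if alert.timestamp - current[-1].timestamp <= window_seconds:
                current.append(alert)
            else:
                clusters.append(current)
                current = [alert]
        clusters.append(current)
    return clusters


def should_trigger_ai(cluster, min_distinct_rules=2):
    """Down-selection: only clusters in which several different
    detections fired are handed to the AI layer."""
    return len({a.rule for a in cluster}) >= min_distinct_rules
```

With this shape, the AI is invoked once per qualifying cluster rather than once per alert, which is where the volume reduction comes from.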

Many current products focus heavily on user interface improvements and fast searching, but lack foundational SIEM processes. They behave more like data lakes or general-purpose search platforms than like systems designed to drive investigations. Without native clustering, down-selection, or event correlation, these platforms cannot independently decide when and what to investigate. The burden remains on the human analyst to determine investigation points, which fundamentally undermines the value of automation. True automation should expand the net of what gets evaluated, not shrink it due to tooling limits.

The issue is compounded in platforms that are not SIEMs at all. Vendors like Bricklayer AI and Command Zero often attach their AI workflows downstream of another platform’s alerts. They rely entirely on the upstream SIEM (e.g., Splunk, Fluency, or other data lakes) to decide what is worth investigating. This means they bypass the hardest part of autonomous security operations: deciding which events merit investigation in the first place. By outsourcing that decision, they avoid the cost and complexity of clustering and down-selection, but also inherit the cost problem when they need to cast a wider net.

The reality is straightforward: if there is too much data for a human to process, there is too much for an AI to process blindly as well. Without robust event clustering, correlation, and reduction at the front of the pipeline, the cost curve makes full automation impractical. This is where products like Fluency stand apart, with a complete infrastructure that includes User and Entity Behavior Analytics (UEBA) to cluster events, reduce volume, and deliver a smaller, higher-quality set of investigations. This lowers token usage, reduces costs, and places the platform in a much stronger position to execute high-quality automated investigations.

Stumbling Block #2 – Lack of Defined, Consistent Operational Processes

A second major barrier to advancing AI-SIEM maturity is the absence of well-defined, consistent processes. In many current implementations, the workflow logic is effectively being left for the AI to invent on the fly. Developers hand an event to an LLM and ask it to “reason through” the problem without providing a fixed, structured process. While this approach may seem flexible, it inherently produces inconsistencies, and in security operations, inconsistency is unacceptable.

Large Language Models are probabilistic by design. By default, they do not always produce the same answer for the same input. In investigative workflows, we want exactly the opposite: deterministic outputs for a given set of conditions. This means LLM temperature should be set to zero, which minimizes (though it may not fully eliminate) run-to-run variation, and the reasoning steps must be pre-defined in the workflow or embedded directly in the LLM’s system instructions. If the base reasoning is flawed or changes unpredictably, every subsequent decision is compromised.

In mature automation, the LLM is not being asked to invent the investigative process every time; it is executing a known process and applying it to new facts. This also requires maintaining state across multiple analysis steps so that information from one stage is reliably carried forward to the next without distortion. Without this, workflows suffer from process drift, a subtle but damaging problem where the investigation unintentionally changes direction as it moves between steps or between different LLM calls.
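
A minimal sketch of a fixed, state-preserving workflow follows. It assumes the model is reached through a caller-supplied function `call_llm(instruction, context, temperature)`, a hypothetical signature rather than any real SDK. The step names and instructions are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Callable

# Fixed reasoning steps, written once by the workflow author instead of
# being invented by the model on each run. Contents are illustrative.
STEPS = (
    ("classify", "Classify the alert type using only the facts provided."),
    ("enrich", "List the facts that support or contradict the classification."),
    ("conclude", "State a verdict and the evidence it rests on."),
)


@dataclass
class InvestigationState:
    facts: dict
    findings: dict = field(default_factory=dict)  # step name -> model output


def run_workflow(facts: dict,
                 call_llm: Callable[[str, str, float], str]) -> InvestigationState:
    """Run every step in order, passing the accumulated state to each call."""
    state = InvestigationState(facts=dict(facts))
    for name, instruction in STEPS:
        # The full prior state is serialized into the context so that no
        # step depends on the model remembering an earlier call.
        context = (f"facts={sorted(state.facts.items())} "
                   f"findings={sorted(state.findings.items())}")
        state.findings[name] = call_llm(instruction, context, 0.0)  # temperature 0
    return state
```

Because the step list and the state object live outside the model, a change in investigative direction has to come from an edit to `STEPS`, which can be reviewed, rather than from drift between calls.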

Many organizations lack both the expertise and the data comprehension to design and maintain these process definitions. Vendors that do not own a rich, well-curated signature or detection set (e.g., products that rely heavily on community content) are at a further disadvantage. The gap between understanding the data and operationalizing it in a consistent, process-driven LLM workflow is wide, and most early AI investigations remain stuck at or near Phase 1 (Atomic analysis) because of it. Even where multi-step workflows exist, they often amount to loosely chained, isolated LLM calls rather than a coherent, state-preserving process. Without anti-drift measures and robust guardrails, these workflows cannot deliver the reliability required for true automation.

Stumbling Block #3 – Governance and Safety in Action Execution

The final major barrier to advancing AI-SIEM systems is governance, ensuring that the implementation of automated investigation and response is not only effective but secure, compliant, and auditable. Building an AI-driven SOC workflow is challenging enough from a technical standpoint, but doing it under appropriate governance controls adds another layer of complexity that many vendors have not yet addressed.

Transparency is the cornerstone of governance: users must be able to see what the system is doing and how the AI reached its results. One cannot treat the AI as a black box.

Security teams cannot simply connect an LLM to their operational tools and let it run. The attack surface expands when an AI is given authority to query sensitive data or initiate actions. The recently disclosed Model Context Protocol (MCP) vulnerability illustrates this risk clearly: connectors and protocol handlers that allow an AI to call external tools can themselves be exploited if not designed with strict parameterization, authentication, and input validation. This means every integration, from a “get user logins” query to an “isolate host” command, must be treated as a potential execution path for an adversary.
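
The idea of strict parameterization and input validation can be sketched as an allowlist check that runs before any AI-requested tool call is executed. The tool names, parameter names, and patterns below are assumptions made for illustration. They are not drawn from MCP or from any product.

```python
import re

# Allowlist of callable tools and the exact parameters each accepts.
# Names and patterns are illustrative placeholders.
TOOL_SCHEMAS = {
    "get_user_logins": {"username": re.compile(r"[A-Za-z0-9._-]{1,64}")},
    "isolate_host": {"hostname": re.compile(r"[A-Za-z0-9-]{1,63}")},
}


class ToolCallRejected(Exception):
    """Raised when a requested tool call does not match the allowlist."""


def validate_tool_call(tool: str, args: dict) -> dict:
    """Return args unchanged if the call matches the allowlist, else raise."""
    schema = TOOL_SCHEMAS.get(tool)
    if schema is None:
        raise ToolCallRejected(f"unknown tool: {tool!r}")
    if set(args) != set(schema):
        raise ToolCallRejected(f"unexpected or missing parameters for {tool!r}")
    for name, pattern in schema.items():
        value = args[name]
        # fullmatch rejects values with trailing or embedded extra content.
        if not isinstance(value, str) or not pattern.fullmatch(value):
            raise ToolCallRejected(f"invalid value for {name!r}")
    return args
```

A call such as `validate_tool_call("isolate_host", {"hostname": "ws-042; rm -rf /"})` is rejected before it reaches the connector. Authentication of the caller is a separate control and is not shown here.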

Governance also demands clear guardrails:

- Role-based access controls to limit which AI agents can execute certain actions.

- Approval workflows for high-impact changes, such as disabling accounts or modifying firewall rules.

- Immutable audit logs that capture every action taken, who approved it, and why.

- Change management processes for modifying prompts, workflows, or tool configurations so that adjustments are reviewed and tested before deployment.
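
The first three guardrails can be sketched together. The role names, action names, and the hash-chained log are illustrative assumptions. Note that hash chaining makes tampering detectable rather than impossible, so a real deployment would also need write-once storage or an external anchor.

```python
import hashlib
import json

# Which agent roles may request which actions, and which actions need a
# named human approver. All names are illustrative.
ROLE_PERMISSIONS = {
    "tier1_agent": {"isolate_host"},
    "responder_agent": {"isolate_host", "disable_account"},
}
REQUIRES_APPROVAL = {"disable_account"}


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = json.dumps(record, sort_keys=True)
        digest = hashlib.sha256((prev_hash + body).encode()).hexdigest()
        self.entries.append({"record": record, "prev": prev_hash, "hash": digest})

    def verify(self) -> bool:
        """Recompute the chain; any edited record breaks it."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = json.dumps(entry["record"], sort_keys=True)
            expected = hashlib.sha256((prev_hash + body).encode()).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


def authorize(role, action, reason, log, approver=None):
    """Return True if the action may run; every decision is logged either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    if allowed and action in REQUIRES_APPROVAL and approver is None:
        allowed = False
    log.append({"role": role, "action": action, "reason": reason,
                "approver": approver, "allowed": allowed})
    return allowed
```

Denied requests are logged as well as granted ones, so the audit trail records what the agent attempted and not only what it did.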

In addition, regulatory compliance frameworks such as ISO/IEC 42001 (AI management systems) and SOC 2 impose requirements for documenting AI behavior, proving it operates within defined risk tolerances, and ensuring that output cannot cause harm without human oversight. These requirements are especially important in Phase 4 (Action-oriented) and Phase 5 (Self-learning), where the AI is either executing changes in production systems or modifying its own processes.

Without rigorous governance, an AI-SIEM risks turning from an asset into a liability. Even an effective investigation engine can become dangerous if it is capable of taking unreviewed actions or is susceptible to toolchain vulnerabilities. Vendors that treat governance as a core design principle, embedding MCP-style protocols with strict typing, approvals, and identity controls, will be in a much better position to deploy trustworthy automation at scale.

Lessons Learned & Conclusions

Evaluating the current AI-SIEM landscape through the lens of our five-phase roadmap and the identified stumbling blocks reveals several clear trends.

1. Difficulty seeing from an AI-first perspective.

Large, established vendors are deeply tied to their existing codebases, interface designs, and operational philosophies. Their workflows assume that humans initiate and control most processes, which naturally leads to copilot-style designs. The idea of AI operating independently, with humans shifting into a quality assurance role, runs counter to how their systems were built and how they imagine their customers work. This is a cultural and architectural barrier, not just a technical one.

2. AI inherits the product’s existing scope.

Vendors that operate in a walled garden or product-only ecosystem replicate that limitation in their AI. A product-centric platform begets a product-centric AI; a walled garden platform begets a walled garden AI. Scope is rarely broadened when AI is introduced; it is more often narrowed to reinforce the existing ecosystem.

3. Governance constraints shape capability.

AI security is still immature, and governance standards like ISO/IEC 42001 are only beginning to be addressed in the security space. Few companies outside of Microsoft and Fluency openly discuss these standards.

4. The gap between marketing and operational reality.

Without a shared framework like this roadmap, most market comparisons devolve into marketing claims. Even AI-driven analysis, when asked to compare products, often reflects vendor hype rather than grounded operational capability. A structured, phase-based model allows us to filter the noise, assess real-world maturity, and identify the capabilities that truly move a SOC toward full automation.

Looking ahead, the next few years will be transformative as more platforms adopt autopilot-oriented designs and broaden their scope toward agnostic, multi-vendor integration. This shift will fundamentally change SOC operations, moving human analysts from the center of every process to oversight and QA of automated investigations. Having a clear global roadmap enables both vendors and buyers to measure progress, set realistic expectations, and invest in the capabilities that matter most for long-term resilience.