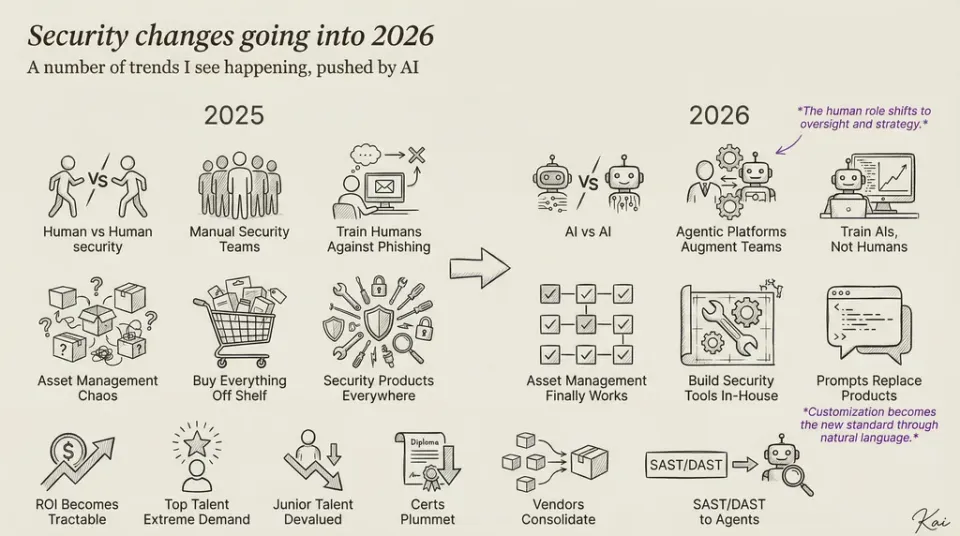

Do We Use AI to Defend Against AI?

AI-assisted attacks are not yet met by defenses built specifically to counter them. Most defensive tools that use AI cannot tell whether the adversary used AI at all. So what’s really happening beneath the surface?

Introduction: The Marketing Mismatch

In today’s cybersecurity landscape, it’s common to see AI-powered attacks and AI-powered defenses packaged together as two sides of the same coin. Vendors frame this as an “AI arms race”—as if every threat is matched by a purpose-built AI shield.

But the reality is more nuanced—and less symmetrical.

Let’s take a closer look at how AI is being used—on both sides of the battlefield.

What AI Is Being Used for in Attacks

AI is giving attackers new advantages in scale, deception, and adaptability. These tools aren’t breaching systems by exploiting flaws in AI—they’re exploiting flaws in people and processes.

Key characteristics:

- Offensive by design: Built using generative or predictive models to deceive or evade.

- Examples:
  - Deepfake voice and video to impersonate trusted individuals
  - AI-generated phishing emails crafted for persuasion and personalization
  - Polymorphic malware that evolves beyond static signatures
  - LLM-driven reconnaissance and social engineering

- What they exploit: Human trust, system access controls, content filters—not AI weaknesses

For the most part, these threats leverage AI less for sophistication in code than for sophistication in interaction, which makes them faster, more scalable, and harder to distinguish from legitimate behavior.
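To make the "evolves beyond static signatures" point concrete, here is a minimal sketch of why exact-match signatures are brittle. It assumes the simplest possible signature scheme, a set of SHA-256 hashes; real products use richer signatures, and the names `KNOWN_BAD`, `SIGNATURES`, and `matches_signature` are hypothetical. The "sample" is a harmless placeholder string.

```python
import hashlib


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


# Hypothetical "known bad" sample: a harmless placeholder, not real malware.
KNOWN_BAD = b"echo pretend-this-is-malicious"

# A static signature database reduced to its simplest form: a set of hashes.
SIGNATURES = {sha256_hex(KNOWN_BAD)}


def matches_signature(payload: bytes) -> bool:
    """Exact-hash lookup, standing in for static signature matching."""
    return sha256_hex(payload) in SIGNATURES


# The same content with a few junk bytes appended.
variant = KNOWN_BAD + b" # padding"

print(matches_signature(KNOWN_BAD))  # True
print(matches_signature(variant))    # False
```

Any change to the bytes changes the hash, so a variant slips past the lookup whether a person, a script, or a model produced it. Nothing in the check depends on AI being involved.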

What AI Is Being Used for in Defense

Defensive AI is largely focused on making internal operations more efficient. It enhances detection and triage, but it does not fundamentally “recognize” whether an attack itself is AI-generated.

Key characteristics:

- Defensive by design: Built to automate detection, modeling, and triage workflows

- Examples:
  - EDRs using ML to baseline behavior and detect anomalies
  - LLMs summarizing and clustering alert data for faster analyst review
  - Automated workflows in SOC platforms for initial triage

- What they enhance: Speed, scale, and correlation, not the ability to attribute an attack to AI

In other words, these tools make defenders more efficient, but they’re not necessarily fighting AI with AI. They are process accelerators, not purpose-built counters to adversarial AI.
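The "baseline behavior and detect anomalies" idea can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular EDR works: it uses a plain z-score over one hypothetical metric (processes spawned per hour on a single host), and the function names, data, and threshold are invented for the example.

```python
from statistics import mean, stdev


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Summarize past observations as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value: float, baseline: tuple[float, float],
                 threshold: float = 3.0) -> bool:
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat history: any deviation at all is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical data: processes spawned per hour by one host.
history = [12, 15, 11, 14, 13, 12, 16, 14]
baseline = build_baseline(history)

print(is_anomalous(14, baseline))  # False
print(is_anomalous(60, baseline))  # True
```

Note what the detector takes as input: a number and a baseline. There is no field for how the activity was produced, which is the sense in which such tools are blind to whether the adversary used AI.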

No Symmetric Arms Race—Yet

Despite what some narratives suggest, there’s no strong evidence that AI defense is evolving in direct response to AI threats.

Instead, both sides are moving in parallel—but independently.

- Attackers use AI to boost existing techniques (e.g., phishing, malware obfuscation)

- Defenders use AI to streamline workflows and detection pipelines

For example, a phishing email generated by a language model might succeed because it’s well-written—not because it bypassed an AI detector. Meanwhile, the AI-powered defense might catch it incidentally based on pattern analysis or keyword flags.
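A toy version of the "keyword flags" case shows why such a catch is incidental. The pattern list, threshold, and sample messages below are all hypothetical, and real filters are far more elaborate; the sketch only illustrates that the score depends on what the message says, not on who or what wrote it.

```python
import re

# Hypothetical flag list; a real filter would use many more signals.
SUSPICIOUS_PATTERNS = [
    r"\burgent(ly)?\b",
    r"\bverify your account\b",
    r"\bwire transfer\b",
    r"\bpassword\b",
]


def phishing_score(body: str) -> int:
    """Count how many suspicious patterns appear in the message body."""
    text = body.lower()
    return sum(1 for pattern in SUSPICIOUS_PATTERNS if re.search(pattern, text))


def is_flagged(body: str, threshold: int = 2) -> bool:
    """Flag the message once enough patterns match."""
    return phishing_score(body) >= threshold


clumsy = "URGENT!! verify your account now or loose access"
polished = ("Hi Dana, following up on yesterday's budget review. "
            "Could you approve the attached invoice before the 3 pm cutoff?")
polished_but_flagged = ("Hi Dana, this is urgent: finance needs the "
                        "wire transfer approved today.")

print(is_flagged(clumsy))                # True
print(is_flagged(polished))              # False
print(is_flagged(polished_but_flagged))  # True
```

The well-written message passes because it avoids the flagged phrases, and the third one is caught because it happens to contain two of them. In neither case does the filter ask whether a language model was involved.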

Most current defenses are still tuned to traditional detection signals such as signatures, behavioral anomalies, and known patterns. They don’t recognize “this is AI-generated.” That attribution layer doesn’t yet exist in a meaningful way.