Fix Your SIEM Mess

Implementing a streaming data fabric gives organizations a new foundation of control. SIEMs become sticky not because of the technology itself, but because the organization loses control over its data.

If you are wondering how to implement a streaming data fabric, take a look at Ingext. Ingext provides a collection of features that make implementing and running a streaming fabric easy.

Why Your Organization Most Likely Needs a Streaming Data Fabric

Every organization depends on its SIEM to make sense of massive amounts of data, yet most fail to realize a meaningful return on that investment and watch operations struggle to perform. The recurring problems are clear: alert fatigue, inability to scale, and the cost and quality of the data feeding the system. Two of these, the inability to scale and the cost and quality of data, are directly related to a poor data management approach.

By implementing a streaming data fabric, the burden of data that clogs and slows your SIEM can finally be managed. A data fabric addresses data placement and continuation. Placement means that we put notable events in the SIEM, put telemetry data in the data lake, and drop useless data. Continuation means ensuring that our data is constantly flowing. That requires monitoring the data sources, and it requires addressing backflow and congestion, the issues behind poor collection performance and data loss.

In short, a streaming data fabric lifts the weight off your SIEM and your operations. By managing how data moves, it gives your team faster access to the information they need and sharper visibility into what’s happening across the environment. Decisions become quicker, investigations become clearer, and the entire operation feels lighter. The constant drag of volume and delay gives way to focus and flow. Even alert fatigue starts to ease, because the noise begins to separate from what really matters. This isn’t just a technical improvement. It’s an operational one. With a streaming data fabric in place, your SIEM performs better, your analysts work smarter, and your organization finally runs with the clarity it was meant to have. Perhaps surprisingly, all of these improvements also reduce cost.

Routing: Putting Data Where It Belongs

The greatest impact on your SIEM’s cost and performance isn’t how much data you collect; it’s where that data goes. Most organizations lose control of costs because they treat all data the same, storing everything in one place and assuming it all has equal value. By pushing every record into the SIEM, we end up paying twice: once to store data that isn’t needed for detection, and again when that same data clogs searches, slows investigations, and makes results harder to interpret.

What’s really happening is a breakdown in routing. When data isn’t placed where it belongs, or isn’t dropped when it’s not needed, the SIEM becomes bloated and inefficient. Analysts and processes waste time searching through noise, storage costs climb, and performance drops.

In a proper structure, there are three primary destinations:

- The SIEM: This is where your high-value, security-relevant data goes. Before sending it, the data fabric often transforms the information, such as normalizing formats, labeling events, or even enriching with additional metadata to make the SIEM more effective.

- The Data Lake: Telemetry data (dense, high-volume streams of small records) is routed by the data fabric to lower-cost data lakes. These environments are optimized for storage and large-scale analytics, not real-time alerting.

- Nowhere (Dropping): Sometimes, the best route is no route at all. By filtering out redundant or low-value data before it ever reaches storage, a data fabric can reduce SIEM ingestion by 35–40%. Of the telemetry that remains, about 95% can move into cheaper storage tiers.

This approach isn’t about collecting less; it’s about collecting smarter. By aligning where data is stored with how it’s used, a streaming data fabric delivers both cost savings and clarity. It’s better engineering, not just cheaper storage. With the right routing strategy, your SIEM becomes leaner, faster, and far more effective, and your organization finally stops paying for confusion.
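The three destinations described above can be sketched as a small routing function. This is only an illustration, not how any particular product implements routing: the `Event` shape, the source and kind labels, and the two classification sets are all hypothetical and would need to reflect your own environment.

```python
from dataclasses import dataclass, field
from enum import Enum


class Destination(Enum):
    SIEM = "siem"
    DATA_LAKE = "data_lake"
    DROP = "drop"


@dataclass
class Event:
    source: str   # hypothetical source label, e.g. "firewall" or "edr"
    kind: str     # hypothetical event kind, e.g. "alert", "flow", "debug"
    fields: dict = field(default_factory=dict)


# Assumed classification rules, for illustration only.
LOW_VALUE_KINDS = {"debug", "heartbeat"}
TELEMETRY_KINDS = {"flow", "dns_query", "connection"}


def route(event: Event) -> Destination:
    """Pick one of the three destinations for an event."""
    if event.kind in LOW_VALUE_KINDS:
        return Destination.DROP        # never reaches storage
    if event.kind in TELEMETRY_KINDS:
        return Destination.DATA_LAKE   # high volume, lower-cost storage
    return Destination.SIEM            # security-relevant, worth alerting on


def enrich_for_siem(event: Event) -> Event:
    """Normalize field names and add a label before sending to the SIEM."""
    normalized = {key.lower(): value for key, value in event.fields.items()}
    normalized["label"] = f"{event.source}:{event.kind}"
    return Event(event.source, event.kind, normalized)


if __name__ == "__main__":
    samples = [
        Event("firewall", "flow", {"SrcIP": "10.0.0.5"}),
        Event("edr", "alert", {"Severity": "high"}),
        Event("app", "debug"),
    ]
    for sample in samples:
        print(sample.kind, "->", route(sample).value)
```

The point of the sketch is that the routing decision lives in one place, outside the SIEM, so changing where a class of data goes is a rule change rather than a rebuild of collection.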

Two main statistics support this design:

- About 40% of collected data is not needed.

- When collecting telemetry data, such as firewall logs, about 95% can be stored in a data lake to support investigations and metrics.

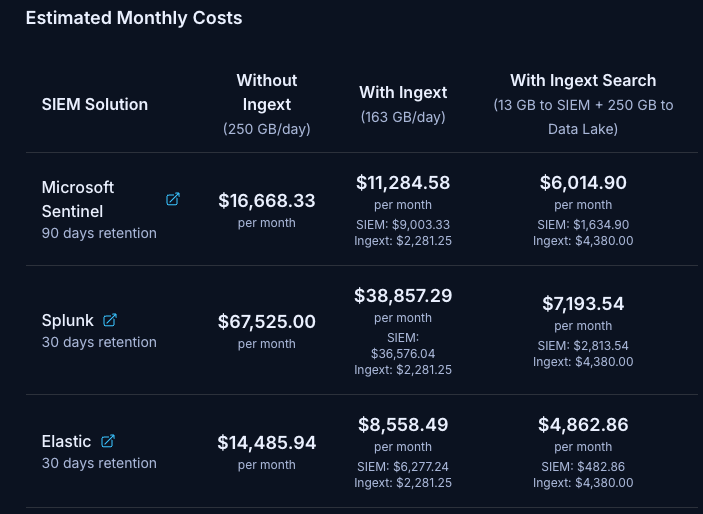

Taken together, these two statistics drastically change the cost of a SIEM and the volume of data stored in it.

We are looking at saving up to 80% when implementing a data fabric strategy. Regardless of the SIEM, fabrics are a massive improvement.
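To see how the two statistics combine, here is a rough arithmetic sketch. The 40% drop rate and the 95% lake rate come from the figures above; the daily volume and the share of kept data that is telemetry are assumptions chosen purely for illustration, and the result describes SIEM volume only, since data lake storage still carries its own (lower) cost.

```python
def placement_volumes(total_gb, drop_fraction, telemetry_fraction, lake_fraction):
    """Split a daily volume into dropped, data lake, and SIEM portions."""
    dropped = total_gb * drop_fraction      # data that is not needed at all
    kept = total_gb - dropped
    telemetry = kept * telemetry_fraction   # assumed share of kept data that is telemetry
    to_lake = telemetry * lake_fraction     # telemetry moved to cheaper storage
    to_siem = kept - to_lake                # everything else still lands in the SIEM
    return {"dropped": dropped, "lake": to_lake, "siem": to_siem}


if __name__ == "__main__":
    # Assumed inputs: 1000 GB/day, with 70% of the kept data being telemetry.
    volumes = placement_volumes(
        total_gb=1000,
        drop_fraction=0.40,
        telemetry_fraction=0.70,
        lake_fraction=0.95,
    )
    reduction = 1 - volumes["siem"] / 1000
    print({name: round(gb) for name, gb in volumes.items()})
    print(f"SIEM volume reduction: {reduction:.0%}")
```

Under these assumed inputs, roughly 200 of the original 1000 GB still reach the SIEM. A different telemetry share would move that number, so treat this as a way to plug in your own measurements rather than as a prediction.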

Flow Management: Handling Data Continuously

The second reason we use a data fabric is continuity, the assurance that data is always flowing when and where it should. One of the constant worries that makes managing a SIEM difficult is uncertainty: are we still getting the data we think we are? In many environments, the system itself isn’t sure. Feeds drop, sources hang, and data pipelines slow without clear visibility into what’s missing or why. It’s not an easy problem to solve.

Think of it like managing water. We can’t control when or how quickly the rain falls, but we can control how it’s collected and released. A data fabric works the same way: it smooths out the flow by handling congestion (when processing can’t keep up) and backflow (when senders have nowhere to send their logs). It becomes the reservoir between sources and systems, absorbing surges and releasing data at the pace our tools can handle. This is how continuity is achieved: not by slowing the rain, but by building the right channels and reserves to keep everything flowing smoothly.

That means managing three key challenges:

- Congestion: When the sender is ready to transmit data, but the receiver (for example, a SIEM) can’t process it fast enough.

- Backflow: When a system tries to send data but is forced to hold it because there’s nowhere to put it, leading to local storage issues or data loss.

- Uptime and Health: Continuously verifying that sources are alive, ports are open, and logs are flowing properly, like confirming that every device is still mailing its letters to the same shared mailbox.

A strong data fabric monitors all of this automatically. It doesn’t just move data; it ensures that every sender and receiver stays in sync.
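The reservoir idea and the three challenges above can be illustrated with a small in-memory buffer. This is a minimal sketch under stated assumptions: the `FlowBuffer` class and its method names are hypothetical, and a real fabric would typically persist buffered data to disk rather than hold it in memory.

```python
import time
from collections import deque


class FlowBuffer:
    """Bounded buffer that sits between senders and a slower receiver."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.last_seen = {}   # source name -> time of its most recent event
        self.rejected = 0     # events refused because the buffer was full

    def offer(self, source, event, now=None):
        """Accept an event if there is room, and record that the source is alive."""
        now = time.monotonic() if now is None else now
        self.last_seen[source] = now
        if len(self.queue) >= self.capacity:
            # Backflow: tell the sender explicitly instead of losing data silently.
            self.rejected += 1
            return False
        self.queue.append((source, event))
        return True

    def drain(self, max_events):
        """Congestion control: release only as many events as the receiver can take."""
        batch = []
        while self.queue and len(batch) < max_events:
            batch.append(self.queue.popleft())
        return batch

    def silent_sources(self, max_idle_seconds, now=None):
        """Health check: list sources that have sent nothing within the idle window."""
        now = time.monotonic() if now is None else now
        return sorted(
            source
            for source, seen in self.last_seen.items()
            if now - seen > max_idle_seconds
        )
```

A collector would call `offer` for each incoming record, a forwarder would call `drain` at whatever rate the SIEM or data lake accepts, and a monitor would poll `silent_sources` to raise a flag when a feed stops.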

Developing a Path to Control

Implementing a streaming data fabric gives organizations a new foundation of control. SIEMs become sticky not because of the technology itself, but because the organization loses control over its data. The SIEM ends up doing everything: collecting, normalizing, storing, and analyzing, when those functions could be distributed across a proper data management layer. When collection and routing are tied directly to the SIEM, every change becomes painful. Replacing or upgrading the SIEM means rebuilding the entire collection infrastructure from scratch.

The same problem appears when trying to add a data lake to offload the telemetry impact on a SIEM. Without a unified data management layer, you end up maintaining two separate infrastructures, one for the SIEM and another for the lake. Each has its own filters, its own uptime checks, and its own routing logic. That duplication isn’t strategy; it’s overhead. And when other teams, such as network or operations, need access to that same data, the complexity multiplies.

A data fabric changes that dynamic. It provides a single, independent layer where data can be collected, filtered, and routed before reaching any tool. It becomes an ecosystem of your own design: flexible enough to add, remove, or redirect destinations without disrupting the rest of the environment. That flexibility is what allows modernization to happen incrementally, not as a massive, high-risk overhaul. You can begin controlling your data today while positioning your organization to migrate to new analytics platforms, integrate data lakes, or adopt new SIEMs tomorrow.

And at its core, this all comes down to cost. Every inefficiency in collection, storage, or routing is a form of financial waste. A streaming data fabric doesn’t just modernize the SIEM architecture; it turns the chaos of data management into a structured, efficient system that saves money while enabling smarter decisions across the organization.