Deskilling. AI is Making Us Dumber.

When professionals depend too heavily on AI systems, the fundamental acts of analyzing, interpreting, judging, and deciding can deteriorate.

The Risk of Cognitive Erosion

Artificial intelligence has rapidly integrated into professions that rely on analytical reasoning. Yet beneath its promise lurks a risk. When professionals depend too heavily on AI systems, the fundamental acts of analyzing, interpreting, judging, and deciding can deteriorate. This phenomenon, known as deskilling, has emerged repeatedly across industries where human expertise was once honed through constant practice. The danger isn't that AI performs poorly, but that over time it may dull the critical thinking and observational skills of the professionals who rely on it.

Doctors Losing Diagnostic Edge

A recent observational study published in The Lancet Gastroenterology & Hepatology found that doctors who routinely used AI assistance during colonoscopies became less effective when performing the procedure without AI help. After AI was introduced across four endoscopy centers in Poland, the adenoma detection rate for non-AI-assisted procedures dropped from 28.4 percent to 22.4 percent, a 6-percentage-point absolute decline and roughly a 20 percent relative reduction in detection capability.
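The difference between the absolute and relative figures can be checked with a few lines of arithmetic. The sketch below uses only the two rounded rates quoted above, so its relative result (about 21 percent) sits slightly above the "roughly 20 percent" summary; the variable names are illustrative.

```python
# Adenoma detection rates (ADR) for non-AI-assisted colonoscopies, as quoted above.
adr_before = 28.4  # percent, before AI was introduced
adr_after = 22.4   # percent, after AI was introduced

# The absolute decline is measured in percentage points.
absolute_drop = adr_before - adr_after

# The relative decline expresses that drop as a share of the starting rate.
relative_drop = absolute_drop / adr_before * 100

print(f"Absolute decline: {absolute_drop:.1f} percentage points")
print(f"Relative decline: {relative_drop:.1f} percent")
```

The relative figure is the one that conveys how much detection capability was lost, since it measures the drop against where the doctors started.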

Experts identified this as clear evidence of a deskilling effect. One researcher compared it to the "Google Maps effect," where people lose their natural navigation abilities by relying on app-provided directions. Consultant gastroenterologist Omer Ahmad warned that even a 1 percent drop in detection rates could significantly affect colorectal cancer outcomes, which makes a decline of this size particularly alarming.

The study also challenged findings from previous clinical trials that reported higher adenoma detection rates with AI assistance. It suggested these trials likely underestimated AI's true deskilling effect because they failed to account for how continuous AI use impairs unassisted performance.

These findings highlight the urgent need for AI deployment strategies that preserve human diagnostic skills. One key recommendation is to implement regular periods of AI-free practice to protect against overdependence, which matters all the more because these AI systems may themselves degrade or misfire over time.

UX Design: Cognitive Offloading and Erosion of Critical Skills

The risk of deskilling is not limited to medicine. A recent study on user experience (UX) design highlights a similar pattern in another professional field. Researchers found that generative AI was increasingly used to handle routine design tasks such as prototyping, usability testing, and even elements of research. While this brought efficiency, many practitioners expressed concern that they were beginning to offload too much of the cognitive work to the system. The study described this process as cognitive offloading, where professionals let the AI do the thinking for them.

The consequence, as the researchers noted, was a gradual erosion of critical skills. Designers reported that tasks once central to their expertise, such as detecting bias in data, developing rationale for design decisions, and maintaining contextual awareness, were at risk of being diminished. In some cases, the responsibility for outcomes was even blurred, with professionals deferring to the system’s choices rather than owning the reasoning themselves. One participant summed up the risk bluntly, warning that relying on AI without deeper reflection leads to results that cannot be justified if challenged.

When placed alongside the findings from medicine, the pattern becomes clearer. In both cases, highly skilled professionals saw the sharpness of their analysis decline as they offloaded more of the mental work to AI. This points to a broader concern: as we increasingly allow machines to carry the burden of interpretation, we weaken our own ability to weigh, evaluate, and decide. The danger is not simply that people forget facts or lose motor habits, but that the very process of critical thinking, the act of making sense of complex information, atrophies when it is no longer exercised.

A Cross-Industry Warning

The evidence from medicine and UX design reveals a striking pattern. Despite working in vastly different fields, both professions show how AI integration delivers efficiency while simultaneously eroding human analytical capacity. Doctors became less capable of identifying precancerous growths without assistance after becoming reliant on AI-assisted detection. Similarly, designers reported that their critical reasoning was at risk of eroding as they delegated research and prototyping to AI systems. These professional skills cannot be reduced to simple memorization or mechanical actions. Both fields rely on judgment, interpretation, and contextual reasoning, precisely the abilities that deteriorate when thinking is outsourced to machines.

This cross-industry evidence serves as a cautionary tale that reaches far beyond medicine and design. The real threat isn't the immediate efficiency AI provides, but rather the subtle deterioration of expertise that occurs when professionals no longer deeply engage with their work.

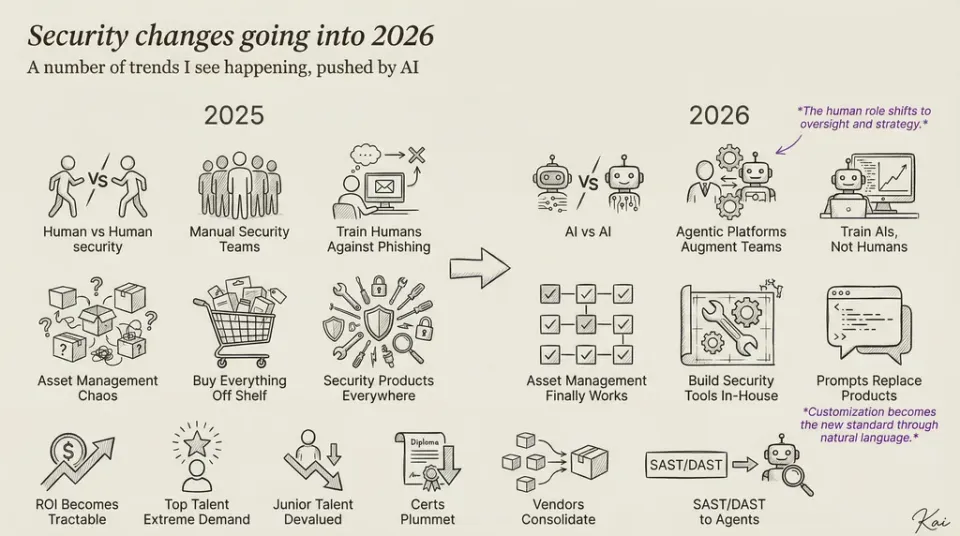

Application to Cybersecurity

The most common use of AI in cybersecurity today isn't replacing analysts but helping them interact with data. Modern tools simplify queries, locate information across tables, and present results intuitively. Rather than writing SQL statements or Lucene queries, analysts can now ask an AI system in plain language to find what they need. The system produces the query, identifies where the data resides, and structures the response appropriately. This accessibility lowers the barrier to entry for those unfamiliar with complex data formats.

The drawback is the gradual erosion of skills among those who once built and refined queries themselves. Just as we no longer memorize phone numbers because our devices do it for us, analysts may lose their grasp of UIDs, field mappings, and the underlying logic of data representation and storage. As the system handles these technical details, this foundational knowledge becomes less practiced. What's lost is a deeper understanding of how systems operate, knowledge that becomes critical when unexpected situations arise.
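To make that foundational knowledge concrete, here is a minimal sketch of the kind of query an analyst might once have written by hand. Everything in it is an assumption for illustration: the `auth_events` table, its column names, the sample rows, and the threshold of three failures do not come from any particular product.

```python
import sqlite3

# Hypothetical schema and rows, for illustration only; real field names differ by platform.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE auth_events (event_time TEXT, src_ip TEXT, user_name TEXT, outcome TEXT)"
)
conn.executemany(
    "INSERT INTO auth_events VALUES (?, ?, ?, ?)",
    [
        ("2024-01-01T10:00:00", "203.0.113.7", "alice", "failure"),
        ("2024-01-01T10:00:05", "203.0.113.7", "alice", "failure"),
        ("2024-01-01T10:00:09", "203.0.113.7", "bob", "failure"),
        ("2024-01-01T10:01:00", "198.51.100.2", "carol", "success"),
        ("2024-01-01T10:02:00", "198.51.100.2", "carol", "failure"),
    ],
)

# Writing this by hand requires knowing which table holds logins, which column
# records the result, and how the source address is stored.
query = """
    SELECT src_ip, COUNT(*) AS failures, COUNT(DISTINCT user_name) AS users
    FROM auth_events
    WHERE outcome = 'failure'
    GROUP BY src_ip
    HAVING COUNT(*) >= ?
    ORDER BY failures DESC
"""
rows = conn.execute(query, (3,)).fetchall()
for src_ip, failures, users in rows:
    print(src_ip, failures, users)
```

An analyst who asks for "sources with repeated failed logins" in plain language gets the same rows back, but never has to decide what counts as a failure, which field identifies the source, or where to set the threshold.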

An even more concerning development is emerging. AI systems are increasingly taking on responsibilities once handled by Tier 1 and Tier 2 analysts, including alert validation, triage, phishing classification, and preliminary scoping. As this shift progresses, the erosion extends beyond data structure knowledge into the core of analytical practice itself. When analysts no longer directly engage in interpreting and correlating data, they lose both the mechanics and the investigative mindset essential to their work.

This pattern isn't unique to AI. Security orchestration, automation, and response (SOAR) platforms demonstrate the same effect. Analysts frequently implement pre-built playbooks, signatures, and rules instead of working through the underlying reasoning these tools encode. While the overwhelming volume of data makes this reliance understandable, it strips away the cognitive development that comes from building analyses step by step. The risk isn't merely falling behind the growing volume of information, but losing the critical thinking habits that define effective analysis.
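As an illustration of the reasoning a pre-built rule encodes, the sketch below spells out a simple failed-login detection step by step. It is a hypothetical example, not taken from any specific playbook: the function name, the five-minute window, and the threshold of three are all assumptions.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Hypothetical thresholds; a real playbook would tune these to its environment.
WINDOW = timedelta(minutes=5)
THRESHOLD = 3

def flag_brute_force(events):
    """Return source IPs with THRESHOLD or more failed logins inside WINDOW.

    `events` is an iterable of (timestamp, src_ip, outcome) tuples sorted by time.
    """
    recent = defaultdict(deque)  # src_ip -> timestamps of recent failures
    flagged = set()
    for ts, src_ip, outcome in events:
        if outcome != "failure":
            continue  # Step 1: only failed logins count as evidence.
        window = recent[src_ip]
        window.append(ts)
        # Step 2: forget failures that are older than the window.
        while ts - window[0] > WINDOW:
            window.popleft()
        # Step 3: many failures close together suggest guessing rather than typos.
        if len(window) >= THRESHOLD:
            flagged.add(src_ip)
    return flagged

t0 = datetime(2024, 1, 1, 10, 0, 0)
events = [
    (t0, "203.0.113.7", "failure"),
    (t0 + timedelta(seconds=30), "203.0.113.7", "failure"),
    (t0 + timedelta(seconds=60), "203.0.113.7", "failure"),
    (t0 + timedelta(minutes=1), "198.51.100.2", "failure"),
    (t0 + timedelta(minutes=20), "198.51.100.2", "failure"),
    (t0 + timedelta(minutes=40), "198.51.100.2", "failure"),
]
print(sorted(flag_brute_force(events)))  # prints ['203.0.113.7']
```

Both sources fail three times, but only one does so within the window. Seeing why the second source is not flagged is the kind of judgment that goes unpracticed when the rule is simply switched on.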

Conclusion

Deskilling is not a possibility to be guarded against; it is an inevitability that has appeared repeatedly as technology advances. We can see it clearly in everyday life. Mechanics who once relied on sound, feel, and experience to diagnose a car engine now rely on digital diagnostic tools. The work is faster and often more accurate, but the craft of manual evaluation has faded. This progression is normal, yet it raises a sharper concern when applied to fields that depend on cognitive reasoning rather than mechanical tasks.

In cybersecurity, the issue is not just that tools perform routine work more efficiently. The deeper risk is that as AI systems take over query construction, triage, and preliminary analysis, professionals begin to lose the underlying understanding of how systems function. The more we rely on AI to interpret events, the less we practice the reasoning required to question or validate those interpretations. Eventually the ability to critically evaluate outcomes may erode, leaving analysts dependent on results they cannot fully explain.

The lesson from medicine, design, and countless other professions is that this pattern will occur in cybersecurity as well. The real question is not whether deskilling will happen, but which skills we are prepared to see diminished, and which forms of cognitive reasoning must be protected. Preserving those skills will require deliberate choices in how AI is deployed, ensuring that humans remain engaged in the act of analysis even as the tools around them grow more capable.