The Rare “2026 Cyber Prediction Blog” that's good

I normally do not like prediction blogs.

Every year around this time, my inbox fills up with “What this year has in store” takes. Most of them are just a rehash of what people are already saying. So when Charles Senne recently sent me a link to a prediction-style post, my expectations were low. Not because it came from Charles, but because it was a prediction blog.

That said, Daniel Miessler wrote one that genuinely stood out, "Cybersecurity Changes I Expect in 2026: My thoughts on what's coming for Cybersecurity in 2026". I read it first thing in the morning, which says a lot because I am usually at my most skeptical then. My honest reaction was, “This is actually pretty good. I should buy this guy a drink.”

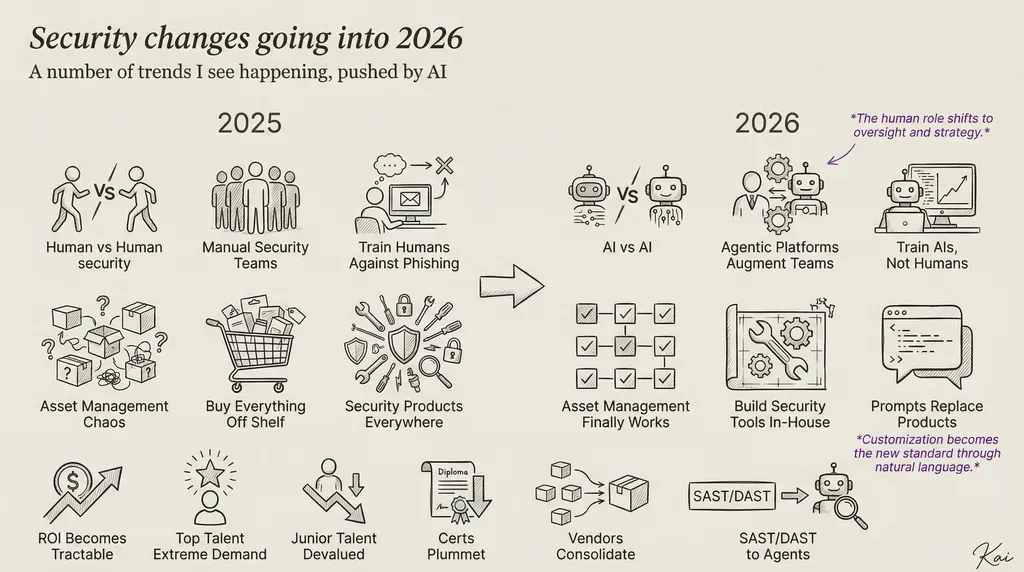

This article could have been written any time in the last few months and would still feel accurate. Rather than guessing, it documents a roadmap for how AI is affecting security work, now and in the near future.

I am getting ready to head down to Texas to talk about AI in security at the TEEX conference, so this is a topic I am invested in. One of the biggest challenges I see is that people do not really understand where AI actually has an impact. Daniel’s post focuses on outcomes. It avoids getting lost in the philosophical debates around AI and instead talks about how it is changing the day-to-day reality of security teams.

Offensive AI vs Defensive AI

Early in the article, Daniel frames things around the idea of offensive AI versus defensive AI, almost like a question of whose AI is better. This is the one place where I slightly disagree with the framing. Told you I was skeptical in the morning.

I do not think we are watching two AIs compete with each other.

On the offensive side, AI is not writing brand new exploits. At least not yet. What it is doing extremely well is interpretation and research of the target. It helps attackers gather better data, tune their messaging, and run cleaner social engineering campaigns. Phishing emails look more legitimate. Fake invoices look more real. Targeting is better because the data gathering is better.

That is very different from what we are seeing on the defensive side.

Defensive AI is about scaling manpower and depth. It is not about suddenly discovering entirely new categories of problems. It is about doing the work we already knew needed to be done but never had the time or people to do well. Daniel hits this perfectly when he talks about AI helping with work that requires a lot of effort. Asset management is a great example, and Daniel highlights it.

Asset management is not glamorous, but it is constant. Infrastructure changes all the time. Keeping track of what exists, what changed, and whether that change matters is hard work. AI is extremely well suited for that kind of problem, because the logic is soft and the rules are contextual rather than rigid.
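
To make that split concrete, here is a minimal sketch, assuming a simple hypothetical inventory keyed by hostname (the `Asset` fields and host names are made up for illustration). The mechanical part, spotting what was added, removed, or changed, is easy to write as rigid code. Deciding whether a new open port on `web-01` actually matters is the contextual judgment where an AI layer would help.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    # Hypothetical inventory record; real inventories carry far more fields.
    hostname: str
    ip: str
    open_ports: frozenset[int]


def diff_inventory(old: dict[str, Asset], new: dict[str, Asset]) -> dict[str, list[str]]:
    """Compare two inventory snapshots keyed by hostname and report what changed."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    # A host counts as changed if any field differs between snapshots.
    changed = sorted(h for h in old.keys() & new.keys() if old[h] != new[h])
    return {"added": added, "removed": removed, "changed": changed}


old = {
    "web-01": Asset("web-01", "10.0.0.5", frozenset({443})),
    "db-01": Asset("db-01", "10.0.0.9", frozenset({5432})),
}
new = {
    "web-01": Asset("web-01", "10.0.0.5", frozenset({443, 22})),
    "app-02": Asset("app-02", "10.0.0.12", frozenset({8080})),
}
print(diff_inventory(old, new))
# {'added': ['app-02'], 'removed': ['db-01'], 'changed': ['web-01']}
```

The diff tells you *that* SSH appeared on a web server; it cannot tell you whether that was a planned maintenance window or a problem. That second question is the soft, contextual part.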

Why AI Is Bad at Detection

One point the article makes by not predicting it is that AI is not going to start detecting new attacks. Security is built on faults and anomalies. A security issue is not the next likely word; it is the opposite.

Large language models do not understand rarity. They operate on likelihood. They generate the most probable next word. That makes them very good at summarizing, explaining, and scaling known patterns. It also means they tend to overlook attacks rather than discover them, unless the data has already been shaped.
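
A toy illustration of that difference, using a simple frequency model rather than a real LLM (the event names and counts are made up): picking the most probable outcome always lands on the common case, while detection has to rank events by how surprising they are.

```python
import math
from collections import Counter

# Toy event stream: hypothetical login outcomes a model has "seen".
history = ["login_ok"] * 97 + ["login_failed"] * 2 + ["login_from_new_country"]

counts = Counter(history)
total = sum(counts.values())
probs = {event: n / total for event, n in counts.items()}

# A likelihood-driven generator picks the most probable next event...
most_likely = max(probs, key=probs.get)


# ...while detection cares about the least probable events (high surprisal).
def surprisal(event: str) -> float:
    """Information content in bits: rarer events score higher."""
    return -math.log2(probs[event])


rare_first = sorted(probs, key=surprisal, reverse=True)

print(most_likely)    # login_ok
print(rare_first[0])  # login_from_new_country
```

The same probability table answers both questions, but a system tuned to produce the likely answer is, by design, looking in the wrong direction for the rare one.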

This is why tooling matters so much. AI is best used to scale existing solutions, especially in areas where there has never been enough manpower to run processes that need a person in the loop.

AI works best when data has already been transformed, enriched, and framed. When some of the hard work has already been done. Asset management fits this model perfectly. So do workflows and SOAR-style automation, at least when soft logic is involved.

SOAR, as a category, has largely failed because it tried to automate everything with rigid logic. It works fine for the easiest tasks and the lowest-hanging fruit. The moment things get contextual, it breaks down. That is where LLMs actually help. Not as replacements for systems, but as flexible layers inside structured workflows.
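
A minimal sketch of that layering, assuming hypothetical alert fields and a stubbed `fake_llm_assess` function standing in for a model call (no real LLM API is used here). Rigid rules handle the lowest-hanging fruit, and anything they cannot decide falls through to a flexible step inside the same workflow.

```python
from typing import Callable, Optional

Alert = dict[str, str]


def rigid_rules(alert: Alert) -> Optional[str]:
    """SOAR-style rules: fine for the easy, unambiguous cases."""
    if alert.get("source") == "known_scanner":
        return "close: benign scanner"
    if alert.get("hash_verdict") == "malicious":
        return "escalate: known-bad file"
    return None  # No rule matched; the case needs context.


def triage(alert: Alert, soft_step: Callable[[Alert], str]) -> str:
    """Run rigid rules first, then hand contextual cases to a soft-logic step."""
    verdict = rigid_rules(alert)
    if verdict is not None:
        return verdict
    return soft_step(alert)


def fake_llm_assess(alert: Alert) -> str:
    # Hypothetical stand-in for an LLM call. A real step would pass enriched
    # context to a model, and its answer would still be reviewed.
    return f"review: needs context ({alert.get('summary', 'no summary')})"


print(triage({"source": "known_scanner"}, fake_llm_assess))
print(triage({"summary": "admin login at 3am from VPN"}, fake_llm_assess))
```

The point of the design is that the model never replaces the workflow; it only fills the gap where rigid rules run out.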

At Fluency, this is why we focus on workflows. It is not about a single agent making a single decision. It is about multiple steps, multiple tools, and structured analysis. Impossible travel analysis is a good example. Projects like LangChain and CrewAI have pushed this idea forward by combining tools with soft logic, and asset management is again a perfect example of where that approach shines.
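
The rigid core of impossible travel is simple arithmetic: distance between two login locations divided by the time between them. A minimal sketch, assuming logins already carry geolocated coordinates and a hypothetical 900 km/h speed threshold; this is an illustration, not Fluency's implementation. The hard, contextual part (VPN exits, cloud egress IPs, shared accounts, geolocation error) is what the arithmetic cannot settle and where soft logic earns its keep.

```python
import math
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Login:
    user: str
    time: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def is_impossible_travel(a: Login, b: Login, max_kmh: float = 900.0) -> bool:
    """Flag two logins if the implied travel speed exceeds max_kmh (hypothetical threshold)."""
    hours = abs((b.time - a.time).total_seconds()) / 3600
    distance = haversine_km(a.lat, a.lon, b.lat, b.lon)
    if hours == 0:
        return distance > 0  # Same instant, different place.
    return distance / hours > max_kmh


houston = Login("alice", datetime(2026, 1, 5, 9, 0), 29.76, -95.37)
london_1h = Login("alice", datetime(2026, 1, 5, 10, 0), 51.51, -0.13)
london_12h = Login("alice", datetime(2026, 1, 5, 21, 0), 51.51, -0.13)
print(is_impossible_travel(houston, london_1h))   # True
print(is_impossible_travel(houston, london_12h))  # False
```

A flag from this function is where the workflow starts, not where it ends: the next steps enrich the IPs and ask whether the "travel" is really a VPN.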

Hiring, Certifications, and Entry-Level Work

Toward the end of the article, Daniel talks about hiring and the collapse of certifications. I agree with him here, and I think this is one of the most important implications of AI in security. It's a bummer it was tucked near the end of the post.

Most certifications are based on rote learning. They encode patterns of how we talk about problems and how we describe solutions. Those patterns map extremely well to what LLMs do naturally. As a result, certifications lose value, not because knowledge is unimportant, but because memorized knowledge is no longer a differentiator.

Hiring is shifting toward skills and results. Can this person actually get something done? Can they implement, evaluate, and improve a system?

What the article does not fully explore is how people enter the field in this new reality. If AI is doing the first pass of analysis and asset tracking, where does the junior analyst fit?

I think the answer is quality assurance.

The entry-level role flips. Instead of humans doing the work and AI checking it, AI does the work and humans evaluate the logic. Did the AI miss something? Did it make an incorrect assumption? Does the result actually make sense in context?

I like to think of it like learning guitar. You do not start by writing songs. You practice scales. You play other people’s music. Over time, you develop intuition. QA works the same way. Reviewing AI output builds judgment, pattern recognition, and real skill.

In this new world, certifications matter less than repetition and exposure.

The Limits of LLMs

One thing not covered in the article, and understandably so, is the long-term limitation of LLMs themselves. This is not something we will solve in 2026. At least one very smart person who has defended LLMs, Yann LeCun, is now looking toward what comes next.

LLMs do not understand the world. They understand language patterns. That is why they sometimes add unnecessary explanations or extra details that sound reasonable but are incorrect. It is not hallucination in the human sense. It is pattern completion.

Ask an LLM to rotate a cube 90 degrees and it will often add details about edges changing, even though nothing changes except the face you see. The model is following a linguistic pattern, not reasoning through geometry.
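
The geometry is easy to check mechanically. A small sketch, assuming a cube centered at the origin and a 90 degree rotation about the vertical (z) axis: the rotated corners are exactly the original corners, and every edge maps onto an edge, so nothing about the cube's structure changes.

```python
import itertools

# Vertices of a cube centered at the origin with side length 2.
vertices = set(itertools.product((-1, 1), repeat=3))


def rotate_z_90(v: tuple[int, int, int]) -> tuple[int, int, int]:
    """Rotate a point 90 degrees counterclockwise about the z-axis: (x, y, z) -> (-y, x, z)."""
    x, y, z = v
    return (-y, x, z)


def edges(points: set[tuple[int, int, int]]) -> set[frozenset]:
    """Cube edges join vertices that differ in exactly one coordinate."""
    return {
        frozenset((p, q))
        for p, q in itertools.combinations(points, 2)
        if sum(a != b for a, b in zip(p, q)) == 1
    }


rotated = {rotate_z_90(v) for v in vertices}
rotated_edges = {frozenset(rotate_z_90(v) for v in e) for e in edges(vertices)}

print(rotated == vertices)                # True: same 8 corners
print(rotated_edges == edges(vertices))   # True: every edge lands on an edge
print(len(edges(vertices)))               # 12
```

The only thing the rotation changes is which face points at the viewer, which is exactly the part a purely linguistic pattern tends to get wrong.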

There is active work happening on world models and non-linguistic reasoning, but that is not a 2026 story. What we will see in the near term is better use of LLMs through structure, tooling, and workflows. Not a sudden leap in raw reasoning ability.

Final Thoughts

I am still not going to go hunting for prediction blogs. But I am probably going to read the ones people send me, at least now and then. This one was worth it because it focused on the industry I care about and changes I am already seeing firsthand.

And for a prediction blog, that is about the best compliment I can give.

Happy New Year.